Realtime ASR + Rubberband

Contents

Realtime ASR + Rubberband#

To improve Realtime ASR, you can stretch voice output using pyrubberband.

This tutorial is available as an IPython notebook at malaya-speech/example/realtime-asr.

This module is not language independent, so it not save to use on different languages. Pretrained models trained on hyperlocal languages.

This is an application of malaya-speech Pipeline, read more about malaya-speech Pipeline at malaya-speech/example/pipeline.

[1]:

import malaya_speech

from malaya_speech import Pipeline

Load VAD model#

We are going to use WebRTC VAD model, read more about VAD at https://malaya-speech.readthedocs.io/en/latest/load-vad.html

[2]:

vad_model = malaya_speech.vad.webrtc()

Recording interface#

So, to start recording audio including realtime VAD and Classification, we need to use malaya_speech.streaming.record. We use pyaudio library as the backend.

def record(

vad,

asr_model = None,

classification_model = None,

device = None,

input_rate: int = 16000,

sample_rate: int = 16000,

blocks_per_second: int = 50,

padding_ms: int = 300,

ratio: float = 0.75,

min_length: float = 0.1,

filename: str = None,

spinner: bool = False,

):

"""

Record an audio using pyaudio library. This record interface required a VAD model.

Parameters

----------

vad: object

vad model / pipeline.

asr_model: object

ASR model / pipeline, will transcribe each subsamples realtime.

classification_model: object

classification pipeline, will classify each subsamples realtime.

device: None

`device` parameter for pyaudio, check available devices from `sounddevice.query_devices()`.

input_rate: int, optional (default = 16000)

sample rate from input device, this will auto resampling.

sample_rate: int, optional (default = 16000)

output sample rate.

blocks_per_second: int, optional (default = 50)

size of frame returned from pyaudio, frame size = sample rate / (blocks_per_second / 2).

50 is good for WebRTC, 30 or less is good for Malaya Speech VAD.

padding_ms: int, optional (default = 300)

size of queue to store frames, size = padding_ms // (1000 * blocks_per_second // sample_rate)

ratio: float, optional (default = 0.75)

if 75% of the queue is positive, assumed it is a voice activity.

min_length: float, optional (default=0.1)

minimum length (s) to accept a subsample.

filename: str, optional (default=None)

if None, will auto generate name based on timestamp.

spinner: bool, optional (default=False)

if True, will use spinner object from halo library.

Returns

-------

result : [filename, samples]

"""

pyaudio will returned int16 bytes, so we need to change to numpy array, normalize it to -1 and +1 floating point.

Check available devices#

[3]:

import sounddevice

sounddevice.query_devices()

[3]:

> 0 External Microphone, Core Audio (1 in, 0 out)

< 1 External Headphones, Core Audio (0 in, 2 out)

2 MacBook Pro Microphone, Core Audio (1 in, 0 out)

3 MacBook Pro Speakers, Core Audio (0 in, 2 out)

4 JustStream Audio Driver, Core Audio (2 in, 2 out)

By default it will use 0 index.

Load ASR model#

[4]:

model = malaya_speech.stt.deep_transducer(model = 'conformer')

Test pyrubberband#

[5]:

import pyrubberband as pyrb

import IPython.display as ipd

[6]:

y, sr = malaya_speech.load('speech/example-speaker/husein-zolkepli.wav')

y_stretch = pyrb.time_stretch(y, sr, 0.8)

[7]:

ipd.Audio(y, rate = sr)

[7]:

[8]:

ipd.Audio(y_stretch, rate = sr)

[8]:

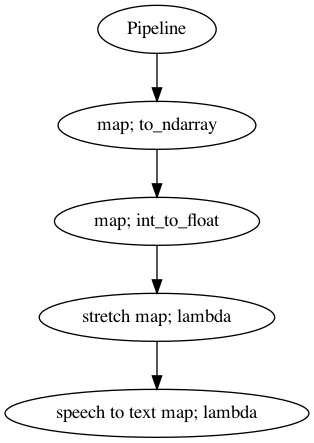

ASR Pipeline#

Because pyaudio will returned int16 bytes, so we need to change to numpy array then normalize to float, feel free to add speech enhancement or any function, but in this example, I just keep it simple.

[9]:

sr = 16000

stretch_ratio = 0.85

p_asr = Pipeline()

pipeline_asr = (

p_asr.map(malaya_speech.astype.to_ndarray)

.map(malaya_speech.astype.int_to_float)

.map(lambda x: pyrb.time_stretch(x, sr, stretch_ratio), name = 'stretch')

.map(lambda x: model(x), name = 'speech-to-text')

)

p_asr.visualize()

[9]:

You need to make sure the last output should named as ``speech-to-text`` or else the realtime engine will throw an error.

Start Recording#

Again, once you start to run the code below, it will straight away recording your voice.

If you run in jupyter notebook, press button stop up there to stop recording, if in terminal, press CTRL + c.

[10]:

file, samples = malaya_speech.streaming.record(vad = vad_model, asr_model = p_asr, spinner = False,

filename = 'realtime-asr-rubberband.wav')

file

Listening (ctrl-C to stop recording) ...

Sample 0 2021-06-12 11:03:39.804085: helo nama saya hussein

Sample 1 2021-06-12 11:03:40.041678: hari ini saya mahu cakap tentang bohong

Sample 2 2021-06-12 11:03:40.274277: apakah itu bon

Sample 3 2021-06-12 11:03:40.543731: yalah pinjaman yang dikeluarkan oleh

Sample 4 2021-06-12 11:03:42.442084: atau kerajaan

Sample 5 2021-06-12 11:03:46.897266: tak ada investor dengan bunga setiap tahun

Sample 6 2021-06-12 11:03:51.883495: enam bulan tiga bulan bergantung kepada bank dan akan ada tempoh mata

Sample 7 2021-06-12 11:03:54.447205: terima kasih

saved audio to realtime-asr-rubberband.wav

[10]:

'realtime-asr-rubberband.wav'

the wav file can get at malaya-speech/speech/record.

Actually it is pretty nice. As you can see, it able to transcribe realtime, you can try it by yourself.

[11]:

import IPython.display as ipd

ipd.Audio(file)

[11]:

[12]:

type(samples[6][0]), samples[6][1]

[12]:

(bytearray,

'enam bulan tiga bulan bergantung kepada bank dan akan ada tempoh mata')

[13]:

y = malaya_speech.utils.astype.to_ndarray(samples[6][0])

ipd.Audio(y, rate = 16000)

[13]: