Classification stacking

Contents

Classification stacking#

This tutorial is available as an IPython notebook at malaya-speech/example/classification-stacking.

This module is language independent, so it save to use on different languages. Pretrained models trained on multilanguages.

This is an application of malaya-speech Pipeline, read more about malaya-speech Pipeline at malaya-speech/example/pipeline.

Why Stacking?#

Sometime a single model is not good enough. So, you need to use multiple models to get a better result! It called stacking.

In this example, I am going to use gender detection module.

[1]:

import malaya_speech

import numpy as np

from malaya_speech import Pipeline

[2]:

y, sr = malaya_speech.load('speech/video/The-Singaporean-White-Boy.wav')

len(y), sr

[2]:

(1634237, 16000)

[3]:

# just going to take 30 seconds

y = y[:sr * 30]

[4]:

import IPython.display as ipd

ipd.Audio(y, rate = sr)

[4]:

List available deep model#

[6]:

malaya_speech.gender.available_model()

INFO:root:last accuracy during training session before early stopping.

[6]:

| Size (MB) | Quantized Size (MB) | Accuracy | |

|---|---|---|---|

| vggvox-v2 | 31.1 | 7.92 | 0.9756 |

| deep-speaker | 96.9 | 24.40 | 0.9455 |

Load deep model#

def deep_model(model: str = 'vggvox-v2', quantized: bool = False, **kwargs):

"""

Load gender detection deep model.

Parameters

----------

model : str, optional (default='vggvox-v2')

Model architecture supported. Allowed values:

* ``'vggvox-v2'`` - finetuned VGGVox V2.

* ``'deep-speaker'`` - finetuned Deep Speaker.

quantized : bool, optional (default=False)

if True, will load 8-bit quantized model.

Quantized model not necessary faster, totally depends on the machine.

Returns

-------

result : malaya_speech.supervised.classification.load function

"""

[7]:

vggvox_v2 = malaya_speech.gender.deep_model(model = 'vggvox-v2')

deep_speaker = malaya_speech.gender.deep_model(model = 'deep-speaker')

How to classify genders in an audio sample#

So we are going to use VAD to help us. Instead we are going to classify as a whole sample, we chunk it into multiple small samples and classify it.

[8]:

vad = malaya_speech.vad.deep_model(model = 'vggvox-v2')

[9]:

%%time

frames = list(malaya_speech.utils.generator.frames(y, 30, sr))

CPU times: user 1.08 ms, sys: 66 µs, total: 1.14 ms

Wall time: 1.15 ms

[10]:

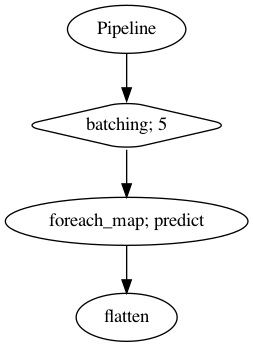

p = Pipeline()

pipeline = (

p.batching(5)

.foreach_map(vad.predict)

.flatten()

)

p.visualize()

[10]:

[11]:

%%time

result = p.emit(frames)

result.keys()

/Users/huseinzolkepli/Documents/tf-1.15/env/lib/python3.7/site-packages/librosa/core/spectrum.py:224: UserWarning: n_fft=512 is too small for input signal of length=480

n_fft, y.shape[-1]

CPU times: user 32.6 s, sys: 6.28 s, total: 38.9 s

Wall time: 9 s

[11]:

dict_keys(['batching', 'predict', 'flatten'])

[12]:

frames_vad = [(frame, result['flatten'][no]) for no, frame in enumerate(frames)]

grouped_vad = malaya_speech.utils.group.group_frames(frames_vad)

grouped_vad = malaya_speech.utils.group.group_frames_threshold(grouped_vad, threshold_to_stop = 0.3)

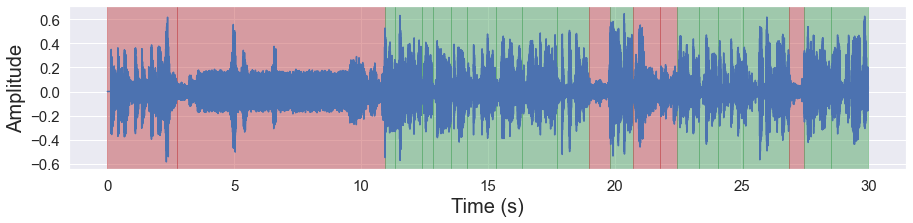

[13]:

malaya_speech.extra.visualization.visualize_vad(y, grouped_vad, sr, figsize = (15, 3))

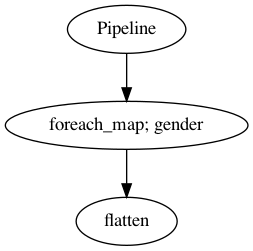

[14]:

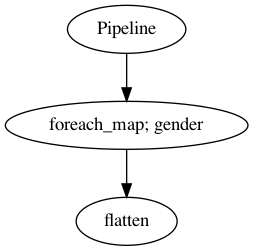

p_vggvox_v2 = Pipeline()

pipeline = (

p_vggvox_v2.foreach_map(vggvox_v2)

.flatten()

)

p_vggvox_v2.visualize()

[14]:

[15]:

p_deep_speaker = Pipeline()

pipeline = (

p_deep_speaker.foreach_map(deep_speaker)

.flatten()

)

p_deep_speaker.visualize()

[15]:

Stacking interface#

def classification_stack(models):

"""

Stacking for classification models. All models should be in the same domain classification.

Parameters

----------

models: List[Callable]

list of models.

Returns

-------

result: malaya_speech.stack.Stack class

"""

def predict_proba(self, inputs, aggregate: Callable = gmean):

"""

Stacking for predictive models, will return probability.

Parameters

----------

inputs: List[np.array]

aggregate : Callable, optional (default=scipy.stats.mstats.gmean)

Aggregate function.

Returns

-------

result: np.array

"""

def predict(self, inputs, aggregate: Callable = gmean):

"""

Stacking for predictive models, will return labels.

Parameters

----------

inputs: List[np.array]

aggregate : Callable, optional (default=scipy.stats.mstats.gmean)

Aggregate function.

Returns

-------

result: List[str]

"""

By default, aggregated function for stacking is scipy.stats.mstats.gmean.

[16]:

gender_stack = malaya_speech.stack.classification_stack([vggvox_v2, vggvox_v2, deep_speaker])

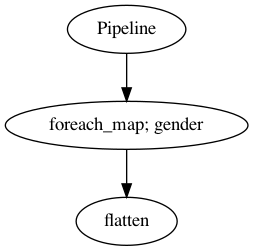

[17]:

p_stacking = Pipeline()

pipeline = (

p_stacking.foreach_map(gender_stack)

.flatten()

)

p_stacking.visualize()

[17]:

[18]:

%%time

samples_vad = [g[0] for g in grouped_vad]

result_vggvox_v2 = p_vggvox_v2.emit(samples_vad)

result_vggvox_v2.keys()

CPU times: user 5.38 s, sys: 1.3 s, total: 6.68 s

Wall time: 2.3 s

[18]:

dict_keys(['gender', 'flatten'])

[20]:

%%time

samples_vad = [g[0] for g in grouped_vad]

result_deep_speaker = p_deep_speaker.emit(samples_vad)

result_deep_speaker.keys()

CPU times: user 4.14 s, sys: 514 ms, total: 4.65 s

Wall time: 851 ms

[20]:

dict_keys(['gender', 'flatten'])

[21]:

%%time

samples_vad = [g[0] for g in grouped_vad]

result_stacking = p_stacking.emit(samples_vad)

result_stacking.keys()

CPU times: user 14.9 s, sys: 2.82 s, total: 17.7 s

Wall time: 3.14 s

[21]:

dict_keys(['gender', 'flatten'])

[23]:

samples_vad_vggvox_v2 = [(frame, result_vggvox_v2['flatten'][no]) for no, frame in enumerate(samples_vad)]

samples_vad_vggvox_v2

[23]:

[(<malaya_speech.model.frame.Frame at 0x14d50fed0>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d526110>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5260d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526190>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d5261d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526250>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526290>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d5262d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526350>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526310>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526210>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526390>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5263d0>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526410>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526450>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5264d0>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526490>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526550>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526590>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526510>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d526610>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d5265d0>, 'female')]

[24]:

samples_vad_deep_speaker = [(frame, result_deep_speaker['flatten'][no]) for no, frame in enumerate(samples_vad)]

samples_vad_deep_speaker

[24]:

[(<malaya_speech.model.frame.Frame at 0x14d50fed0>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d526110>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5260d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526190>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d5261d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526250>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526290>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d5262d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526350>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526310>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526210>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526390>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5263d0>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526410>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526450>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d5264d0>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526490>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526550>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526590>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526510>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d526610>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d5265d0>, 'female')]

[25]:

samples_vad_stacking = [(frame, result_stacking['flatten'][no]) for no, frame in enumerate(samples_vad)]

samples_vad_stacking

[25]:

[(<malaya_speech.model.frame.Frame at 0x14d50fed0>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d526110>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5260d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526190>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d5261d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526250>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526290>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d5262d0>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526350>, 'male'),

(<malaya_speech.model.frame.Frame at 0x14d526310>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526210>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526390>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5263d0>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526410>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526450>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d5264d0>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526490>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526550>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526590>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d526510>, 'not a gender'),

(<malaya_speech.model.frame.Frame at 0x14d526610>, 'female'),

(<malaya_speech.model.frame.Frame at 0x14d5265d0>, 'female')]

[26]:

import matplotlib.pyplot as plt

[ ]:

nrows = 4

fig, ax = plt.subplots(nrows = nrows, ncols = 1)

fig.set_figwidth(20)

fig.set_figheight(nrows * 3)

malaya_speech.extra.visualization.visualize_vad(y, grouped_vad, sr, ax = ax[0])

malaya_speech.extra.visualization.plot_classification(samples_vad_vggvox_v2,

'emotion detection vggvox v2', ax = ax[1])

malaya_speech.extra.visualization.plot_classification(samples_vad_deep_speaker,

'emotion detection deep speaker', ax = ax[2])

malaya_speech.extra.visualization.plot_classification(samples_vad_stacking,

'emotion detection stacking', ax = ax[3])

fig.tight_layout()

plt.show()