Force Alignment using Transducer PyTorch

Contents

Force Alignment using Transducer PyTorch#

Forced alignment is a technique to take an orthographic transcription of an audio file and generate a time-aligned version.

This tutorial is available as an IPython notebook at malaya-speech/example/force-alignment-transducer-pt.

This module is not language independent, so it not save to use on different languages. Pretrained models trained on hyperlocal languages.

[1]:

import malaya_speech

import numpy as np

from malaya_speech import Pipeline

import IPython.display as ipd

import matplotlib.pyplot as plt

from malaya_speech.utils.aligner import plot_alignments

`pyaudio` is not available, `malaya_speech.streaming.pyaudio` is not able to use.

List available Force Aligner model#

[2]:

malaya_speech.force_alignment.transducer.available_pt_transformer()

[2]:

| Size (MB) | malay-malaya | malay-fleur102 | Language | singlish | |

|---|---|---|---|---|---|

| mesolitica/conformer-tiny | 38.5 | {'WER': 0.17341180814, 'CER': 0.05957485024} | {'WER': 0.19524478979, 'CER': 0.0830808938} | [malay] | NaN |

| mesolitica/conformer-base | 121 | {'WER': 0.122076123261, 'CER': 0.03879606324} | {'WER': 0.1326737206665, 'CER': 0.05032914857} | [malay] | NaN |

| mesolitica/conformer-medium | 243 | {'WER': 0.1054817492564, 'CER': 0.0313518992842} | {'WER': 0.1172708897486, 'CER': 0.0431050488} | [malay] | NaN |

| mesolitica/emformer-base | 162 | {'WER': 0.175762423786, 'CER': 0.06233919000537} | {'WER': 0.18303839134, 'CER': 0.0773853362} | [malay] | NaN |

| mesolitica/conformer-base-singlish | 121 | NaN | NaN | [singlish] | {'WER': 0.06517537334361, 'CER': 0.03265430876} |

| mesolitica/conformer-medium-mixed | 243 | {'WER': 0.111166517935, 'CER': 0.03410958328} | {'WER': 0.108354748, 'CER': 0.037785722} | [malay, singlish] | {'WER': 0.091969755225, 'CER': 0.044627194623} |

Load Transducer Aligner model#

def pt_transformer(

model: str = 'mesolitica/conformer-base',

**kwargs,

):

"""

Load Encoder-Transducer ASR model using Pytorch.

Parameters

----------

model : str, optional (default='mesolitica/conformer-base')

Check available models at `malaya_speech.force_alignment.transducer.available_pt_transformer()`.

Returns

-------

result : malaya_speech.torch_model.torchaudio.ForceAlignment class

"""

[3]:

model = malaya_speech.force_alignment.transducer.pt_transformer(model = 'mesolitica/conformer-medium-mixed')

Load sample#

Malay samples#

[4]:

malay1, sr = malaya_speech.load('speech/example-speaker/shafiqah-idayu.wav')

malay2, sr = malaya_speech.load('speech/example-speaker/haqkiem.wav')

[5]:

texts = ['nama saya shafiqah idayu',

'sebagai pembangkang yang matang dan sejahtera pas akan menghadapi pilihan raya umum dan tidak menumbang kerajaan dari pintu belakang']

[6]:

ipd.Audio(malay2, rate = sr)

[6]:

Singlish samples#

[7]:

import json

import os

from glob import glob

with open('speech/imda/output.json') as fopen:

data = json.load(fopen)

data

[7]:

{'221931702.WAV': 'wantan mee is a traditional local cuisine',

'221931818.WAV': 'ahmad khan adelene wee chin suan and robert ibbetson',

'221931727.WAV': 'saravanan gopinathan george yeo yong boon and tay kheng soon'}

[8]:

wavs = glob('speech/imda/*.WAV')

wavs

[8]:

['speech/imda/221931727.WAV',

'speech/imda/221931818.WAV',

'speech/imda/221931702.WAV']

[9]:

y, sr = malaya_speech.load(wavs[0])

[10]:

ipd.Audio(y, rate = sr)

[10]:

Predict#

def predict(self, input, transcription: str, temperature: float = 1.0):

"""

Transcribe input, will return a string.

Parameters

----------

input: np.array

np.array or malaya_speech.model.frame.Frame.

transcription: str

transcription of input audio

temperature: float, optional (default=1.0)

temperature for logits.

Returns

-------

result: Dict[words_alignment, subwords_alignment, subwords, alignment]

"""

Predict Malay#

Our original text is: ‘sebagai pembangkang yang matang dan sejahtera pas akan menghadapi pilihan raya umum dan tidak menumbang kerajaan dari pintu belakang’

[11]:

results = model.predict(malay2, texts[1])

[12]:

results.keys()

[12]:

dict_keys(['words_alignment', 'subwords_alignment', 'subwords', 'alignment'])

[13]:

results['words_alignment']

[13]:

[{'text': 'sebagai',

'start': 0.07991686893203884,

'end': 0.11987530339805826,

'start_t': 0,

'end_t': 0,

'score': 0.8652574},

{'text': 'pembangkang',

'start': 0.5594180825242718,

'end': 1.0389192961165048,

'start_t': 2,

'end_t': 4,

'score': 2.2811141e-06},

{'text': 'yang',

'start': 1.158794599514563,

'end': 1.1987530339805823,

'start_t': 5,

'end_t': 5,

'score': 4.3430943e-09},

{'text': 'matang',

'start': 1.3985452063106798,

'end': 1.7182126820388348,

'start_t': 6,

'end_t': 7,

'score': 4.1879557e-06},

{'text': 'dan',

'start': 1.798129550970874,

'end': 1.8380879854368932,

'start_t': 7,

'end_t': 8,

'score': 1.9011324e-05},

{'text': 'sejahtera',

'start': 2.0378801577669905,

'end': 2.477422936893204,

'start_t': 8,

'end_t': 10,

'score': 2.5778693e-06},

{'text': 'pas',

'start': 2.7970904126213596,

'end': 2.996882584951457,

'start_t': 12,

'end_t': 12,

'score': 2.9329883e-10},

{'text': 'akan',

'start': 3.1167578883495146,

'end': 3.156716322815534,

'start_t': 13,

'end_t': 13,

'score': 1.2522179e-06},

{'text': 'menghadapi',

'start': 3.396466929611651,

'end': 3.796051274271845,

'start_t': 14,

'end_t': 16,

'score': 1.6652191e-05},

{'text': 'pilihan',

'start': 4.035801881067961,

'end': 4.395427791262136,

'start_t': 17,

'end_t': 18,

'score': 5.8163936e-09},

{'text': 'raya',

'start': 4.515303094660195,

'end': 4.555261529126214,

'start_t': 19,

'end_t': 19,

'score': 1.4073122e-09},

{'text': 'umum',

'start': 4.83497057038835,

'end': 5.194596480582525,

'start_t': 20,

'end_t': 21,

'score': 9.590183e-08},

{'text': 'dan',

'start': 5.314471783980583,

'end': 5.354430218446602,

'start_t': 22,

'end_t': 22,

'score': 0.032095414},

{'text': 'tidak',

'start': 5.514263956310679,

'end': 5.554222390776698,

'start_t': 23,

'end_t': 23,

'score': 9.107502e-08},

{'text': 'menumbang',

'start': 5.833931432038835,

'end': 6.113640473300971,

'start_t': 24,

'end_t': 25,

'score': 6.4145235e-07},

{'text': 'kerajaan',

'start': 6.313432645631068,

'end': 6.353391080097087,

'start_t': 26,

'end_t': 26,

'score': 2.017659e-07},

{'text': 'dari',

'start': 6.992726031553398,

'end': 7.032684466019417,

'start_t': 29,

'end_t': 29,

'score': 7.6962095e-07},

{'text': 'pintu',

'start': 7.312393507281554,

'end': 7.352351941747573,

'start_t': 30,

'end_t': 30,

'score': 1.0205139e-06},

{'text': 'belakang',

'start': 7.672019417475729,

'end': 7.711977851941748,

'start_t': 32,

'end_t': 32,

'score': 2.4569164e-07}]

[14]:

len(results['subwords'])

[14]:

34

[15]:

results['alignment'].shape

[15]:

(34, 34)

[16]:

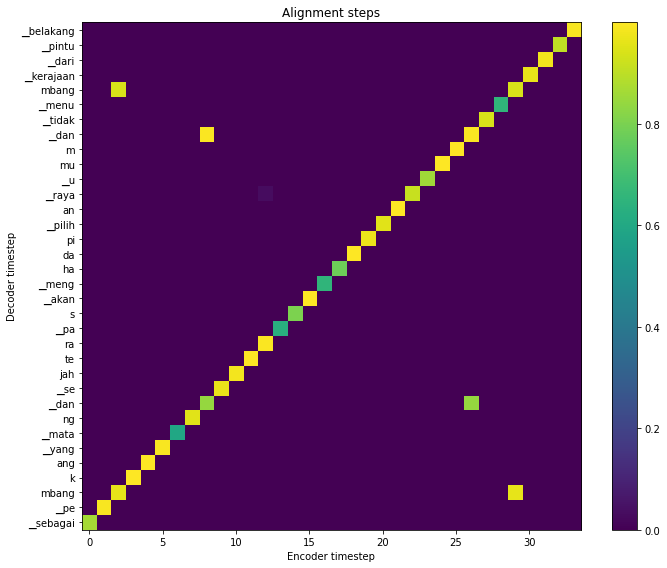

fig = plt.figure(figsize=(10, 8))

ax = fig.add_subplot(111)

ax.set_title('Alignment steps')

im = ax.imshow(

results['alignment'],

aspect='auto',

origin='lower',

interpolation='none')

ax.set_yticks(range(len(results['subwords'])))

labels = [item.get_text() for item in ax.get_yticklabels()]

ax.set_yticklabels(results['subwords'])

fig.colorbar(im, ax=ax)

xlabel = 'Encoder timestep'

plt.xlabel(xlabel)

plt.ylabel('Decoder timestep')

plt.tight_layout()

plt.show()

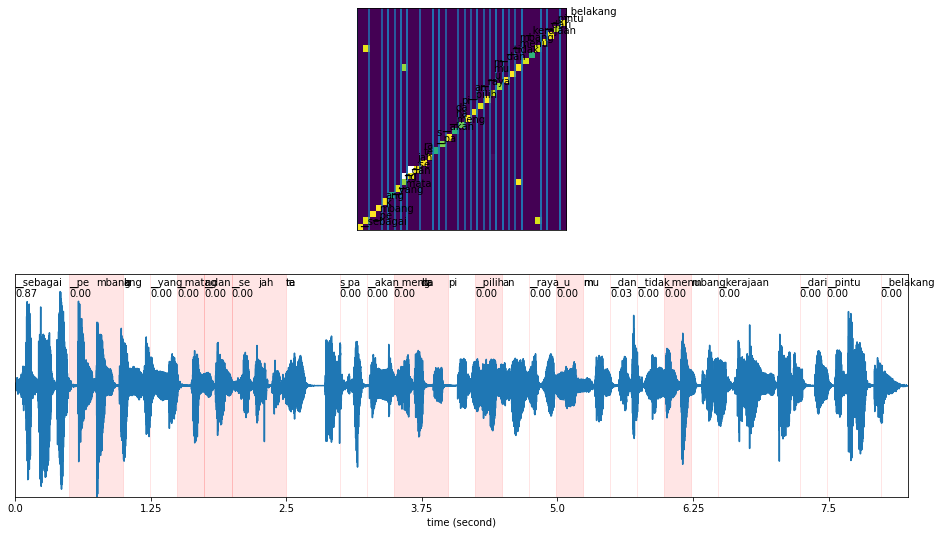

[17]:

plot_alignments(alignment = results['alignment'],

subs_alignment = results['subwords_alignment'],

words_alignment = results['words_alignment'],

waveform = malay2,

sample_rate = 16000,

figsize = (16, 9))

Predict Singlish#

Our original text is: ‘saravanan gopinathan george yeo yong boon and tay kheng soon’

[20]:

results = model.predict(y, data[os.path.split(wavs[0])[1]])

[21]:

results.keys()

[21]:

dict_keys(['words_alignment', 'subwords_alignment', 'subwords', 'alignment'])

[22]:

results['words_alignment']

[22]:

[{'text': 'saravanan',

'start': 0.8410552763819095,

'end': 1.5219095477386935,

'start_t': 3,

'end_t': 6,

'score': 9.532287e-07},

{'text': 'gopinathan',

'start': 1.8423115577889446,

'end': 2.6032663316582916,

'start_t': 7,

'end_t': 10,

'score': 1.789202e-06},

{'text': 'george',

'start': 3.364221105527638,

'end': 3.6045226130653267,

'start_t': 13,

'end_t': 14,

'score': 1.8525572e-05},

{'text': 'yeo',

'start': 3.844824120603015,

'end': 4.045075376884422,

'start_t': 15,

'end_t': 16,

'score': 1.0203461e-05},

{'text': 'yong',

'start': 4.1652261306532665,

'end': 4.365477386934674,

'start_t': 16,

'end_t': 17,

'score': 1.0203461e-05},

{'text': 'boon',

'start': 4.685879396984925,

'end': 4.926180904522613,

'start_t': 18,

'end_t': 19,

'score': 1.3545392e-07},

{'text': 'and',

'start': 5.727185929648241,

'end': 5.767236180904522,

'start_t': 22,

'end_t': 22,

'score': 3.4447204e-08},

{'text': 'tay',

'start': 6.007537688442211,

'end': 6.207788944723618,

'start_t': 23,

'end_t': 24,

'score': 5.423156e-07},

{'text': 'kheng',

'start': 6.28788944723618,

'end': 6.488140703517588,

'start_t': 24,

'end_t': 25,

'score': 1.647781e-06},

{'text': 'soon',

'start': 6.568241206030151,

'end': 6.848592964824121,

'start_t': 26,

'end_t': 27,

'score': 9.646859e-07}]

[23]:

results['subwords_alignment']

[23]:

[{'text': '▁sa',

'start': 0.8410552763819095,

'end': 0.8811055276381908,

'start_t': 3,

'end_t': 3,

'score': 1.2220553e-07},

{'text': 'ra',

'start': 1.081356783919598,

'end': 1.1214070351758796,

'start_t': 4,

'end_t': 4,

'score': 9.243605e-08},

{'text': 'v',

'start': 1.201507537688442,

'end': 1.2415577889447236,

'start_t': 5,

'end_t': 5,

'score': 1.0656663e-08},

{'text': 'an',

'start': 1.3216582914572863,

'end': 1.3617085427135678,

'start_t': 5,

'end_t': 5,

'score': 5.4261122e-08},

{'text': 'an',

'start': 1.481859296482412,

'end': 1.5219095477386935,

'start_t': 6,

'end_t': 6,

'score': 8.020109e-06},

{'text': '▁go',

'start': 1.8423115577889446,

'end': 1.882361809045226,

'start_t': 7,

'end_t': 7,

'score': 3.209235e-06},

{'text': 'p',

'start': 1.962462311557789,

'end': 2.0025125628140703,

'start_t': 8,

'end_t': 8,

'score': 2.0064768e-08},

{'text': 'in',

'start': 2.1226633165829143,

'end': 2.1627135678391958,

'start_t': 8,

'end_t': 8,

'score': 6.117405e-08},

{'text': 'at',

'start': 2.2428140703517587,

'end': 2.28286432160804,

'start_t': 9,

'end_t': 9,

'score': 1.5955281e-06},

{'text': 'han',

'start': 2.56321608040201,

'end': 2.6032663316582916,

'start_t': 10,

'end_t': 10,

'score': 1.7622291e-08},

{'text': '▁ge',

'start': 3.364221105527638,

'end': 3.4042713567839193,

'start_t': 13,

'end_t': 13,

'score': 0.000544741},

{'text': 'or',

'start': 3.4843718592964823,

'end': 3.5244221105527638,

'start_t': 14,

'end_t': 14,

'score': 2.721273e-07},

{'text': 'ge',

'start': 3.5644723618090453,

'end': 3.6045226130653267,

'start_t': 14,

'end_t': 14,

'score': 4.555813e-06},

{'text': '▁',

'start': 3.844824120603015,

'end': 3.8848743718592966,

'start_t': 15,

'end_t': 15,

'score': 1.9964894e-07},

{'text': 'y',

'start': 3.884874371859296,

'end': 3.9249246231155777,

'start_t': 15,

'end_t': 15,

'score': 8.268911e-06},

{'text': 'e',

'start': 3.964974874371859,

'end': 4.005025125628141,

'start_t': 15,

'end_t': 16,

'score': 0.99506426},

{'text': 'o',

'start': 4.005025125628141,

'end': 4.045075376884422,

'start_t': 16,

'end_t': 16,

'score': 0.67549676},

{'text': '▁',

'start': 4.1652261306532665,

'end': 4.205276381909548,

'start_t': 16,

'end_t': 16,

'score': 4.2439947e-06},

{'text': 'y',

'start': 4.205276381909548,

'end': 4.2453266331658295,

'start_t': 16,

'end_t': 17,

'score': 0.0015577499},

{'text': 'ong',

'start': 4.325427135678392,

'end': 4.365477386934674,

'start_t': 17,

'end_t': 17,

'score': 6.180808e-08},

{'text': '▁bo',

'start': 4.685879396984925,

'end': 4.725929648241206,

'start_t': 18,

'end_t': 18,

'score': 4.90003e-07},

{'text': 'on',

'start': 4.886130653266331,

'end': 4.926180904522613,

'start_t': 19,

'end_t': 19,

'score': 4.4304317e-05},

{'text': '▁and',

'start': 5.727185929648241,

'end': 5.767236180904522,

'start_t': 22,

'end_t': 22,

'score': 0.95396453},

{'text': '▁ta',

'start': 6.007537688442211,

'end': 6.047587939698492,

'start_t': 23,

'end_t': 24,

'score': 0.99530405},

{'text': 'y',

'start': 6.167738693467337,

'end': 6.207788944723618,

'start_t': 24,

'end_t': 24,

'score': 0.999545},

{'text': '▁k',

'start': 6.28788944723618,

'end': 6.327939698492462,

'start_t': 24,

'end_t': 25,

'score': 0.38012785},

{'text': 'h',

'start': 6.367989949748743,

'end': 6.408040201005025,

'start_t': 25,

'end_t': 25,

'score': 0.000104434235},

{'text': 'e',

'start': 6.408040201005025,

'end': 6.448090452261306,

'start_t': 25,

'end_t': 25,

'score': 1.6290902e-06},

{'text': 'ng',

'start': 6.448090452261306,

'end': 6.488140703517588,

'start_t': 25,

'end_t': 25,

'score': 4.857545e-07},

{'text': '▁so',

'start': 6.568241206030151,

'end': 6.608291457286432,

'start_t': 26,

'end_t': 26,

'score': 1.017138e-07},

{'text': 'on',

'start': 6.80854271356784,

'end': 6.848592964824121,

'start_t': 26,

'end_t': 27,

'score': 7.557719e-06}]

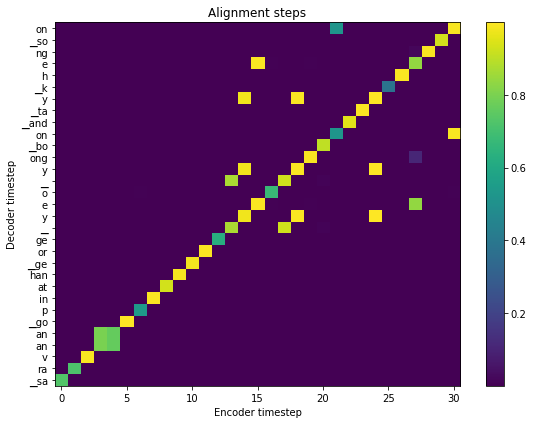

[24]:

fig = plt.figure(figsize=(8, 6))

ax = fig.add_subplot(111)

ax.set_title('Alignment steps')

im = ax.imshow(

results['alignment'].T,

aspect='auto',

origin='lower',

interpolation='none')

ax.set_yticks(range(len(results['subwords'])))

labels = [item.get_text() for item in ax.get_yticklabels()]

ax.set_yticklabels(results['subwords'])

fig.colorbar(im, ax=ax)

xlabel = 'Encoder timestep'

plt.xlabel(xlabel)

plt.ylabel('Decoder timestep')

plt.tight_layout()

plt.show()

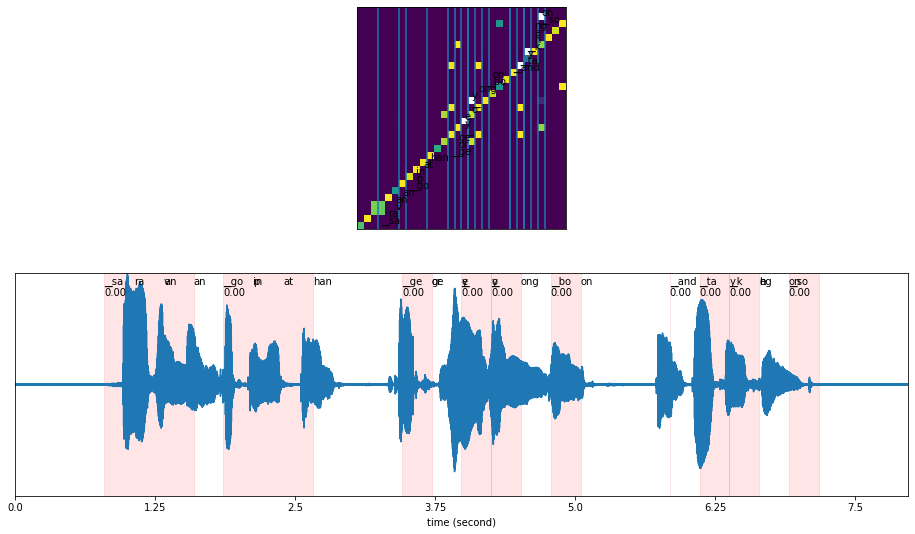

[25]:

plot_alignments(alignment = results['alignment'],

subs_alignment = results['subwords_alignment'],

words_alignment = results['words_alignment'],

waveform = y,

sample_rate = 16000,

figsize = (16, 9))

What if we give wrong transcription?#

[27]:

results = model.predict(y, 'husein sangat comel')

results

[27]:

{'words_alignment': [{'text': 'husein',

'start': 0.8410552763819095,

'end': 1.281608040201005,

'start_t': 1,

'end_t': 1,

'score': 9.416054e-08},

{'text': 'sangat',

'start': 2.2828643216080398,

'end': 2.3229145728643212,

'start_t': 2,

'end_t': 2,

'score': 6.818997e-07},

{'text': 'comel',

'start': 3.324170854271357,

'end': 3.8848743718592966,

'start_t': 3,

'end_t': 3,

'score': 1.3340428e-05}],

'subwords_alignment': [{'text': '▁hu',

'start': 0.8410552763819095,

'end': 0.8811055276381908,

'start_t': 1,

'end_t': 1,

'score': 9.416054e-08},

{'text': 'se',

'start': 1.1214070351758794,

'end': 1.1614572864321608,

'start_t': 1,

'end_t': 1,

'score': 4.8340604e-07},

{'text': 'in',

'start': 1.2415577889447236,

'end': 1.281608040201005,

'start_t': 1,

'end_t': 1,

'score': 4.0840082e-09},

{'text': '▁sangat',

'start': 2.2828643216080398,

'end': 2.3229145728643212,

'start_t': 2,

'end_t': 2,

'score': 2.5190607e-06},

{'text': '▁co',

'start': 3.324170854271357,

'end': 3.3642211055276383,

'start_t': 3,

'end_t': 3,

'score': 0.00043028969},

{'text': 'me',

'start': 3.5644723618090453,

'end': 3.6045226130653267,

'start_t': 3,

'end_t': 3,

'score': 0.00030472153},

{'text': 'l',

'start': 3.844824120603015,

'end': 3.8848743718592966,

'start_t': 3,

'end_t': 3,

'score': 4.8931256e-06}],

'subwords': ['▁hu', 'se', 'in', '▁sangat', '▁co', 'me', 'l'],

'alignment': array([[2.72694809e-08, 1.46691752e-06, 2.72644751e-09, 7.75767646e-08,

2.31194292e-07, 1.09011422e-08, 1.23739765e-08],

[9.41605407e-08, 4.83406041e-07, 4.08400824e-09, 1.76549584e-08,

2.92625117e-07, 2.61610573e-08, 8.27328950e-06],

[3.89696273e-04, 6.81899678e-07, 1.51673867e-05, 2.51906067e-06,

4.52378590e-05, 6.25562825e-06, 7.36032205e-04],

[2.27073040e-02, 1.52006196e-05, 1.33404283e-05, 2.23881052e-05,

4.30289685e-04, 3.04721529e-04, 4.89312561e-06],

[3.43373443e-07, 4.62461092e-09, 1.42082008e-08, 3.31597818e-07,

1.25402551e-07, 2.50174423e-08, 7.87086810e-06],

[1.73348526e-05, 6.10900361e-06, 1.20940858e-05, 3.23311951e-06,

1.60034288e-05, 2.12415078e-04, 3.16820992e-03],

[4.66601477e-07, 8.34146192e-08, 7.52743574e-08, 1.89134155e-07,

1.75862976e-06, 3.68233337e-08, 1.27846804e-02]], dtype=float32)}

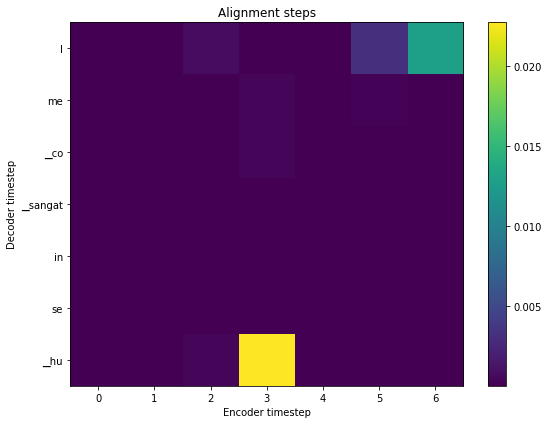

[28]:

fig = plt.figure(figsize=(8, 6))

ax = fig.add_subplot(111)

ax.set_title('Alignment steps')

im = ax.imshow(

results['alignment'].T,

aspect='auto',

origin='lower',

interpolation='none')

ax.set_yticks(range(len(results['subwords'])))

labels = [item.get_text() for item in ax.get_yticklabels()]

ax.set_yticklabels(results['subwords'])

fig.colorbar(im, ax=ax)

xlabel = 'Encoder timestep'

plt.xlabel(xlabel)

plt.ylabel('Decoder timestep')

plt.tight_layout()

plt.show()

The text output not able to align.