Realtime Classification


Suppose you want to split a realtime audio recording with VAD and then classify each segment with classification models. You can do that with Malaya-Speech!

This tutorial is available as an IPython notebook at malaya-speech/example/realtime-classification.

This module is language independent, so it is safe to use on different languages. The pretrained models were trained on multiple languages.

This is an application of the malaya-speech Pipeline; read more about it at malaya-speech/example/pipeline.

[1]:

import malaya_speech

from malaya_speech import Pipeline

from malaya_speech.utils.astype import float_to_int

Cannot import beam_search_ops from Tensorflow Addons, ['malaya.jawi_rumi.deep_model', 'malaya.phoneme.deep_model', 'malaya.rumi_jawi.deep_model', 'malaya.stem.deep_model'] will not available to use, make sure Tensorflow Addons version >= 0.12.0

check compatible Tensorflow version with Tensorflow Addons at https://github.com/tensorflow/addons/releases

/Users/huseinzolkepli/.pyenv/versions/3.9.4/lib/python3.9/site-packages/tqdm/auto.py:22: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

torchaudio.io.StreamReader exception: FFmpeg libraries are not found. Please install FFmpeg.

`torchaudio.io.StreamReader` is not available, `malaya_speech.streaming.torchaudio.stream` is not able to use.

`openai-whisper` is not available, native whisper processor is not available, will use huggingface processor instead.

`torchaudio.io.StreamReader` is not available, `malaya_speech.streaming.torchaudio` is not able to use.

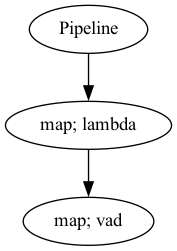

Load VAD model

The fastest and most commonly used model is webrtc. Read more about VAD at https://malaya-speech.readthedocs.io/en/latest/load-vad.html

[2]:

webrtc = malaya_speech.vad.webrtc()

p_vad = Pipeline()

pipeline = (

p_vad.map(lambda x: float_to_int(x, divide_max_abs=False))

.map(webrtc)

)

p_vad.visualize()

[2]:

Starting with malaya-speech 1.4.0, streaming always returns a float32 array with values between -1 and +1.
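The `float_to_int` step in the VAD pipeline converts these streamed floats to integers before they reach webrtc, since WebRTC VAD works on 16-bit PCM. Below is a minimal NumPy sketch of such a conversion. It assumes that `float_to_int(x, divide_max_abs=False)` scales by the int16 maximum without normalizing by the peak; the helper name `float32_to_int16` is hypothetical and not part of malaya-speech.

```python
import numpy as np

def float32_to_int16(x: np.ndarray) -> np.ndarray:
    """Scale floats in [-1, 1] to 16-bit PCM without peak normalization."""
    # Clip first so out-of-range values cannot wrap around after the cast.
    clipped = np.clip(x, -1.0, 1.0)
    # The cast truncates toward zero, e.g. 16383.5 becomes 16383.
    return (clipped * 32767).astype(np.int16)

chunk = np.array([0.0, 0.5, -1.0, 1.0], dtype=np.float32)
pcm = float32_to_int16(chunk)
print(pcm.dtype, pcm.tolist())
```

Skipping peak normalization keeps quiet chunks quiet, which matters for a detector that partly relies on signal energy.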

Streaming interface

def stream(
    vad_model=None,
    asr_model=None,
    classification_model=None,
    sample_rate: int = 16000,
    segment_length: int = 2560,
    num_padding_frames: int = 20,
    ratio: float = 0.75,
    min_length: float = 0.1,
    max_length: float = 10.0,
    realtime_print: bool = True,
    **kwargs,
):
    """
    Stream an audio using pyaudio library.

    Parameters
    ----------
    vad_model: object, optional (default=None)
        vad model / pipeline.
    asr_model: object, optional (default=None)
        ASR model / pipeline, will transcribe each subsamples realtime.
    classification_model: object, optional (default=None)
        classification pipeline, will classify each subsamples realtime.
    device: None, optional (default=None)
        `device` parameter for pyaudio, check available devices from `sounddevice.query_devices()`.
    sample_rate: int, optional (default = 16000)
        output sample rate.
    segment_length: int, optional (default=2560)
        usually derived from asr_model.segment_length * asr_model.hop_length,
        size of audio chunks, actual size in term of second is `segment_length` / `sample_rate`.
    ratio: float, optional (default = 0.75)
        if 75% of the queue is positive, assumed it is a voice activity.
    min_length: float, optional (default=0.1)
        minimum length (second) to accept a subsample.
    max_length: float, optional (default=10.0)
        maximum length (second) to accept a subsample.
    realtime_print: bool, optional (default=True)
        Will print results for ASR.
    **kwargs: vector argument
        vector argument pass to malaya_speech.streaming.pyaudio.Audio interface.

    Returns
    -------
    result : List[dict]
    """
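The `ratio` and `num_padding_frames` parameters suggest a sliding-window vote over recent VAD decisions. The sketch below illustrates that idea in plain Python, assuming the interface follows the collector pattern commonly used with WebRTC VAD. It is not the library's actual code, and `segment_voiced` is a hypothetical name.

```python
from collections import deque
from typing import Iterable, List, Tuple

def segment_voiced(
    flags: Iterable[bool],
    num_padding_frames: int = 20,
    ratio: float = 0.75,
) -> List[Tuple[int, int]]:
    """Return (start, end) frame index ranges judged to be speech.

    `flags` holds one VAD decision per frame. A segment opens when more than
    `ratio` of the last `num_padding_frames` decisions are voiced, and closes
    when more than `ratio` of them are unvoiced.
    """
    window = deque(maxlen=num_padding_frames)
    segments = []
    triggered = False
    start = 0
    index = -1
    for index, voiced in enumerate(flags):
        window.append((index, voiced))
        if not triggered:
            num_voiced = sum(1 for _, v in window if v)
            if num_voiced > ratio * window.maxlen:
                triggered = True
                start = window[0][0]  # include the buffered lead-in frames
                window.clear()
        else:
            num_unvoiced = sum(1 for _, v in window if not v)
            if num_unvoiced > ratio * window.maxlen:
                segments.append((start, index + 1))
                triggered = False
                window.clear()
    if triggered:
        # Input ended while still inside a voiced segment.
        segments.append((start, index + 1))
    return segments

# 5 silent frames, 10 voiced frames, 10 silent frames.
flags = [False] * 5 + [True] * 10 + [False] * 10
print(segment_voiced(flags, num_padding_frames=4, ratio=0.75))
```

In this sketch a segment keeps the trailing frames that were needed to decide that speech had ended, so short pauses inside an utterance do not split it.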

Check available devices

[3]:

import sounddevice

sounddevice.query_devices()

[3]:

> 0 MacBook Air Microphone, Core Audio (1 in, 0 out)

< 1 MacBook Air Speakers, Core Audio (0 in, 2 out)

By default, the device at index 0 is used.
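When a machine has several devices, it can help to list only those that can record. The sketch below filters device entries by input channel count. It assumes each entry is a dict with a `max_input_channels` key, as in the entries returned by `sounddevice.query_devices()`. The sample list is hand-written to mirror the listing above, and `input_device_indices` is a hypothetical helper.

```python
from typing import Dict, List

def input_device_indices(devices: List[Dict]) -> List[int]:
    """Return the indices of devices that can record audio."""
    return [
        index
        for index, device in enumerate(devices)
        if device.get("max_input_channels", 0) > 0
    ]

# Hand-written entries mirroring the listing above; on a real machine use
# `devices = list(sounddevice.query_devices())` instead.
devices = [
    {"name": "MacBook Air Microphone", "max_input_channels": 1, "max_output_channels": 0},
    {"name": "MacBook Air Speakers", "max_input_channels": 0, "max_output_channels": 2},
]
print(input_device_indices(devices))
```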

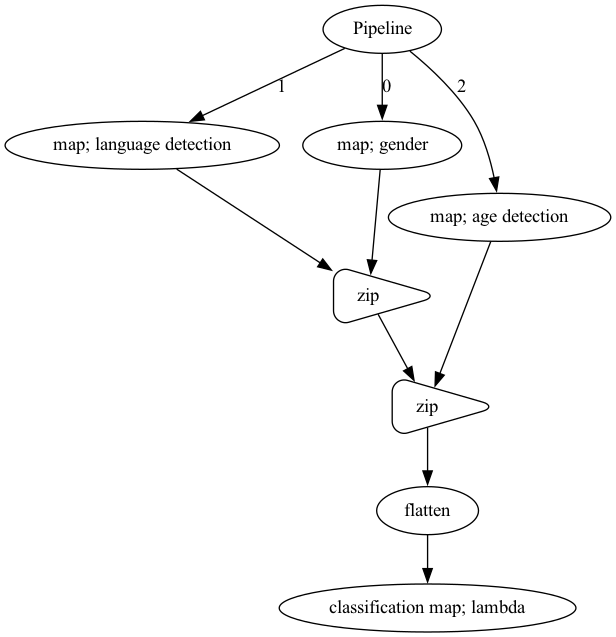

Load Classification models

In this example, I am going to use three different modules: gender detection, language detection, and age detection.

[4]:

gender_model = malaya_speech.gender.deep_model(model = 'vggvox-v2')

language_detection_model = malaya_speech.language_detection.deep_model(model = 'vggvox-v2')

age_model = malaya_speech.age_detection.deep_model(model = 'vggvox-v2')

/Users/huseinzolkepli/Documents/server-husein/dev/malaya-speech/malaya_speech/utils/featurization.py:38: FutureWarning: Pass sr=16000, n_fft=512 as keyword args. From version 0.10 passing these as positional arguments will result in an error

self.mel_basis = librosa.filters.mel(

Classification Pipeline

In this example, I keep the pipeline simple. It must end with a map named ``classification``, or else the streaming interface will throw an error.

[5]:

p_classification = Pipeline()

to_float = p_classification

gender = to_float.map(gender_model)

language_detection = to_float.map(language_detection_model)

age_detection = to_float.map(age_model)

combined = gender.zip(language_detection).zip(age_detection).flatten()

combined.map(lambda x: x, name = 'classification')

p_classification.visualize()

[5]:
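The pipeline sends the same audio to three models and merges their outputs into a single list of labels. The plain-Python sketch below shows that data flow without using the malaya-speech Pipeline API. The three stand-in classifiers are hypothetical and return fixed labels purely for illustration.

```python
from typing import Callable, List, Sequence

Classifier = Callable[[Sequence[float]], str]

def classify_all(audio: Sequence[float], models: List[Classifier]) -> List[str]:
    """Apply every model to the same audio and collect one label per model."""
    return [model(audio) for model in models]

# Hypothetical stand-ins for the gender, language and age models.
def fake_gender(audio: Sequence[float]) -> str:
    return "male"

def fake_language(audio: Sequence[float]) -> str:
    return "malay"

def fake_age(audio: Sequence[float]) -> str:
    return "twenties"

labels = classify_all([0.0, 0.1, -0.1], [fake_gender, fake_language, fake_age])
print(labels)
```

The order of the models fixes the order of the labels, so the first label is the gender, the second the language, and the third the age group.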

Again, once you run the code below, it will start recording your voice straight away.

If you run it in a Jupyter notebook, press the stop button at the top to stop recording; in a terminal, press CTRL + C.

[6]:

samples = malaya_speech.streaming.pyaudio.stream(p_vad, classification_model = p_classification,

segment_length = 320)

2023-04-02 19:18:58.270803: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:354] MLIR V1 optimization pass is not enabled

2023-04-02 19:18:58.294652: W tensorflow/core/platform/profile_utils/cpu_utils.cc:128] Failed to get CPU frequency: 0 Hz

(['not a gender', 'not a language', 'teens']) (['male', 'indonesian', 'teens']) (['male', 'malay', 'twenties']) (['male', 'indonesian', 'twenties']) (['male', 'others', 'teens']) (['male', 'malay', 'teens']) (['female', 'not a language', 'fifties'])
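The call passes `segment_length = 320` instead of the default 2560. Using the docstring's formula `segment_length` / `sample_rate`, that is 0.02 seconds per chunk rather than 0.16 seconds. A likely reason, stated here as an assumption rather than something this tutorial confirms, is that WebRTC VAD only accepts frames of 10, 20, or 30 ms. The helper names below are hypothetical.

```python
def chunk_seconds(segment_length: int, sample_rate: int = 16000) -> float:
    """Duration of one audio chunk in seconds."""
    return segment_length / sample_rate

def is_webrtc_frame(segment_length: int, sample_rate: int = 16000) -> bool:
    """True if the chunk lasts exactly 10, 20 or 30 ms."""
    # Integer arithmetic avoids floating-point comparison issues.
    return any(segment_length * 1000 == ms * sample_rate for ms in (10, 20, 30))

for length in (320, 2560):
    print(length, chunk_seconds(length), is_webrtc_frame(length))
```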

[7]:

samples

[7]:

[{'wav_data': array([0.00218571, 0.00169103, 0.00161687, ..., 0.00227111, 0.00199278,

0.00150959], dtype=float32),

'start': 0.88,

'classification_model': ['not a gender', 'not a language', 'teens'],

'end': 2.0},

{'wav_data': array([ 0.0022611 , 0.00253195, 0.002885 , ..., -0.00176323,

-0.00120754, -0.00099424], dtype=float32),

'start': 3.96,

'classification_model': ['male', 'indonesian', 'teens'],

'end': 5.92},

{'wav_data': array([-0.00446817, -0.00548439, -0.00511238, ..., -0.0019373 ,

-0.00055957, -0.0007821 ], dtype=float32),

'start': 9.8,

'classification_model': ['male', 'malay', 'twenties'],

'end': 12.260000000000002},

{'wav_data': array([-9.5548556e-04, -7.1723713e-04, -6.5159990e-04, ...,

4.9564158e-05, 8.1537823e-05, 8.0748869e-06], dtype=float32),

'start': 13.14,

'classification_model': ['male', 'indonesian', 'twenties'],

'end': 16.1},

{'wav_data': array([-0.00077093, -0.00065072, -0.00030474, ..., -0.00551166,

-0.0049879 , -0.00419824], dtype=float32),

'start': 16.12,

'classification_model': ['male', 'others', 'teens'],

'end': 17.48},

{'wav_data': array([ 0.0007841 , 0.00062648, 0.00038873, ..., -0.00136539,

0.00106348, 0.00191391], dtype=float32),

'start': 17.82,

'classification_model': ['male', 'malay', 'teens'],

'end': 19.64},

{'wav_data': array([ 0.00167696, 0.00195421, 0.00150523, ..., -0.00358349,

-0.00352618, -0.00210211], dtype=float32),

'start': 20.1,

'classification_model': ['female', 'not a language', 'fifties'],

'end': 21.540000000000003}]
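Each entry in `samples` carries `start`, `end`, and the list of labels, which makes simple summaries easy. The sketch below totals the speech duration and counts labels per classifier. It uses the first three entries from the output above with `wav_data` omitted and the end times rounded; `summarize` is a hypothetical helper.

```python
from collections import Counter
from typing import Dict, List

def summarize(samples: List[Dict]) -> Dict:
    """Total speech duration and label counts per classifier position."""
    total = sum(s["end"] - s["start"] for s in samples)
    # Transpose the per-segment label lists into one tuple per classifier.
    per_model = zip(*(s["classification_model"] for s in samples))
    counts = [Counter(labels) for labels in per_model]
    return {"total_seconds": round(total, 2), "label_counts": counts}

samples_meta = [
    {"start": 0.88, "end": 2.0,
     "classification_model": ["not a gender", "not a language", "teens"]},
    {"start": 3.96, "end": 5.92,
     "classification_model": ["male", "indonesian", "teens"]},
    {"start": 9.8, "end": 12.26,
     "classification_model": ["male", "malay", "twenties"]},
]
summary = summarize(samples_meta)
print(summary["total_seconds"])
print(summary["label_counts"][0].most_common(1))
```

With the real `samples` list, the same function works unchanged because it only reads the `start`, `end`, and `classification_model` keys.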

[8]:

import IPython.display as ipd

import numpy as np

[9]:

ipd.Audio(samples[-1]['wav_data'], rate = 16000)

[9]:

[10]:

ipd.Audio(np.concatenate([s['wav_data'] for s in samples[:5]]), rate = 16000)

[10]: