Long Audio ASR

Contents

Long Audio ASR#

Let say you want to transcribe long audio using TorchAudio, malaya-speech able to do that.

This tutorial is available as an IPython notebook at malaya-speech/example/long-audio-asr-torchaudio.

This module is not language independent, so it not save to use on different languages. Pretrained models trained on hyperlocal languages.

This is an application of malaya-speech Pipeline, read more about malaya-speech Pipeline at malaya-speech/example/pipeline.

[1]:

import malaya_speech

from malaya_speech import Pipeline

from malaya_speech.utils.astype import float_to_int

`pyaudio` is not available, `malaya_speech.streaming.pyaudio` is not able to use.

Load VAD model#

We are going to use WebRTC VAD model, read more about VAD at https://malaya-speech.readthedocs.io/en/latest/load-vad.html

[2]:

vad_model = malaya_speech.vad.webrtc()

[3]:

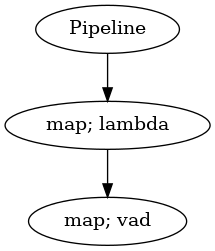

p_vad = Pipeline()

pipeline = (

p_vad.map(lambda x: float_to_int(x, divide_max_abs=False))

.map(vad_model)

)

p_vad.visualize()

[3]:

Starting malaya-speech 1.4.0, streaming always returned a float32 array between -1 and +1 values.

Streaming interface#

def stream(

src,

vad_model=None,

asr_model=None,

classification_model=None,

format=None,

option=None,

buffer_size: int = 4096,

sample_rate: int = 16000,

segment_length: int = 2560,

num_padding_frames: int = 20,

ratio: float = 0.75,

min_length: float = 0.1,

max_length: float = 10.0,

realtime_print: bool = True,

**kwargs,

):

"""

Stream an audio using torchaudio library.

Parameters

----------

vad_model: object, optional (default=None)

vad model / pipeline.

asr_model: object, optional (default=None)

ASR model / pipeline, will transcribe each subsamples realtime.

classification_model: object, optional (default=None)

classification pipeline, will classify each subsamples realtime.

format: str, optional (default=None)

Supported `format` for `torchaudio.io.StreamReader`,

https://pytorch.org/audio/stable/generated/torchaudio.io.StreamReader.html#torchaudio.io.StreamReader

option: dict, optional (default=None)

Supported `option` for `torchaudio.io.StreamReader`,

https://pytorch.org/audio/stable/generated/torchaudio.io.StreamReader.html#torchaudio.io.StreamReader

buffer_size: int, optional (default=4096)

Supported `buffer_size` for `torchaudio.io.StreamReader`, buffer size in byte. Used only when src is file-like object,

https://pytorch.org/audio/stable/generated/torchaudio.io.StreamReader.html#torchaudio.io.StreamReader

sample_rate: int, optional (default = 16000)

output sample rate.

segment_length: int, optional (default=2560)

usually derived from asr_model.segment_length * asr_model.hop_length,

size of audio chunks, actual size in term of second is `segment_length` / `sample_rate`.

num_padding_frames: int, optional (default=20)

size of acceptable padding frames for queue.

ratio: float, optional (default = 0.75)

if 75% of the queue is positive, assumed it is a voice activity.

min_length: float, optional (default=0.1)

minimum length (second) to accept a subsample.

max_length: float, optional (default=10.0)

maximum length (second) to accept a subsample.

realtime_print: bool, optional (default=True)

Will print results for ASR.

**kwargs: vector argument

vector argument pass to malaya_speech.streaming.pyaudio.Audio interface.

Returns

-------

result : List[dict]

"""

Load ASR model#

[4]:

malaya_speech.stt.transducer.available_pt_transformer()

[4]:

| Size (MB) | malay-malaya | malay-fleur102 | Language | singlish | |

|---|---|---|---|---|---|

| mesolitica/conformer-tiny | 38.5 | {'WER': 0.17341180814, 'CER': 0.05957485024} | {'WER': 0.19524478979, 'CER': 0.0830808938} | [malay] | NaN |

| mesolitica/conformer-base | 121 | {'WER': 0.122076123261, 'CER': 0.03879606324} | {'WER': 0.1326737206665, 'CER': 0.05032914857} | [malay] | NaN |

| mesolitica/conformer-medium | 243 | {'WER': 0.12777757303, 'CER': 0.0393998776} | {'WER': 0.1379928549, 'CER': 0.05876827088} | [malay] | NaN |

| mesolitica/emformer-base | 162 | {'WER': 0.175762423786, 'CER': 0.06233919000537} | {'WER': 0.18303839134, 'CER': 0.0773853362} | [malay] | NaN |

| mesolitica/conformer-singlish | 121 | NaN | NaN | [singlish] | {'WER': 0.08535878149, 'CER': 0.0452357273822,... |

| mesolitica/conformer-medium-mixed | 243 | {'WER': 0.122076123261, 'CER': 0.03879606324} | {'WER': 0.1326737206665, 'CER': 0.05032914857} | [malay, singlish] | {'WER': 0.08535878149, 'CER': 0.0452357273822,... |

[6]:

model = malaya_speech.stt.transducer.pt_transformer(model = 'mesolitica/conformer-base')

[7]:

_ = model.eval()

ASR Pipeline#

Feel free to add speech enhancement or any function, but in this example, I just keep it simple.

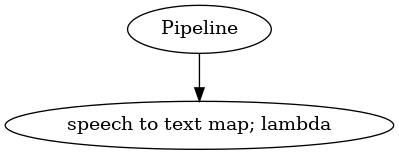

[8]:

p_asr = Pipeline()

pipeline_asr = (

p_asr.map(lambda x: model.beam_decoder([x])[0], name = 'speech-to-text')

)

p_asr.visualize()

[8]:

You need to make sure the last output should named as ``speech-to-text`` or else the streaming interface will throw an error.

Start streaming#

[9]:

samples = malaya_speech.streaming.torchaudio.stream('speech/podcast/2x5%20Ep%2010.wav',

vad_model = p_vad,

asr_model = p_asr,

segment_length = 320)

/home/husein/.local/lib/python3.8/site-packages/torchaudio/io/_stream_reader.py:696: UserWarning: The number of buffered frames exceeded the buffer size. Dropping the old frames. To avoid this, you can set a higher buffer_chunk_size value. (Triggered internally at /root/project/torchaudio/csrc/ffmpeg/stream_reader/buffer.cpp:157.)

return self._be.process_packet(timeout, backoff)

luangkan waktu untuk ikutilah drama tikus ini apabila pangkat menjadi taruhan berapa jabatan pun bermula siapa antara mereka yang berjaya dan siapa pula yang tak ke mana mana dua kali sebagai amanah dalam dua kali episod lepas rashid jalan butuh yang berlubang kita tanya sebab aku banyak kebaikan dan pengetahuannya kita tua dia akan berlalu dengan sia sia tak boleh macam ni ini simple sangat kalau boleh aku nak nampak gempak depan datuk arif tak boleh buat macam ni masuk aku nak tanya ni kau buat apa ni kenapa simpan sangat simple sangat kan aku macam ni simple dapat ini kerja orang pemalas ambil kau aku nak kau aku nak proposal ni nampak gempak gempak bogel ada bas eh apa bendalah aku ada bas kau faham tak nak gempa macam mana lagi jangan nampak macam mana tempat lagi kau tanya aku itu kerja engkau tu kerja engkau aku pergi weh kau telefon lama lagi ke macam biasalah ni sebab apa pula dia kata pun pasal aku tak nampak simple sangat macam tujuh malas selama jah tu kau akulah macam tu siapa tu kena tukar dengan kau dulu kita orang satu beg tapi bezanya aku tak pandai kipas macam dia tu sama sekarang ni semua nak kipas tahu pun assalamualaikum tu asyik ada apa saya tahu pejabat kan ya betul saya dah tak sini sebab nak tolong datuk tolong apa ni saya sampai pagi macam ni mesti datuk tengah fikir nak pakai stokin apa dia kata apa kata mana tahu tak tahu nak pakai topi mana satu dengan kasut yang mana kita tahu satu atuk tak payahlah terkejut tak tahu nak tahu saya ni memang pakar kalau buat tolong orang bagus tak macam tu tahun ni saya tolong saya tak tahulah cakap apa awak ni betul betul ada kagum rashid banyak sudah tua saya akan tolong datuk buat keputusan tempat okey stokin warna kelabu ni kata kita sesuai dengan tu sebab warna kelabu mempengaruhimu seorang kalau dah tu nak tahu warna kelabu ni dikaitkan dengan kemerdekaan sesuai dengan stoking warna merah tu lampau benda tu saya rasa tak sesuai dengan perwatakan datuk sambutan lupa kalau awak saya macam macam pokemon ni sesuai ini melambangkan dia jatuh ni seorang yang ada tak sama okeylah

[10]:

len(samples)

[10]:

23

[11]:

import IPython.display as ipd

import numpy as np

[18]:

samples[4]

[18]:

{'wav_data': array([ 0.0458374 , 0.04632568, 0.0489502 , ..., -0.03302002,

-0.03625488, -0.02584839], dtype=float32),

'timestamp': datetime.datetime(2023, 2, 17, 0, 31, 10, 914959),

'asr_model': 'tak boleh macam ni ini simple sangat kalau boleh aku nak nampak gempak depan datuk arif tak boleh buat macam ni masuk'}

[19]:

ipd.Audio(samples[4]['wav_data'], rate = 16000)

[19]: